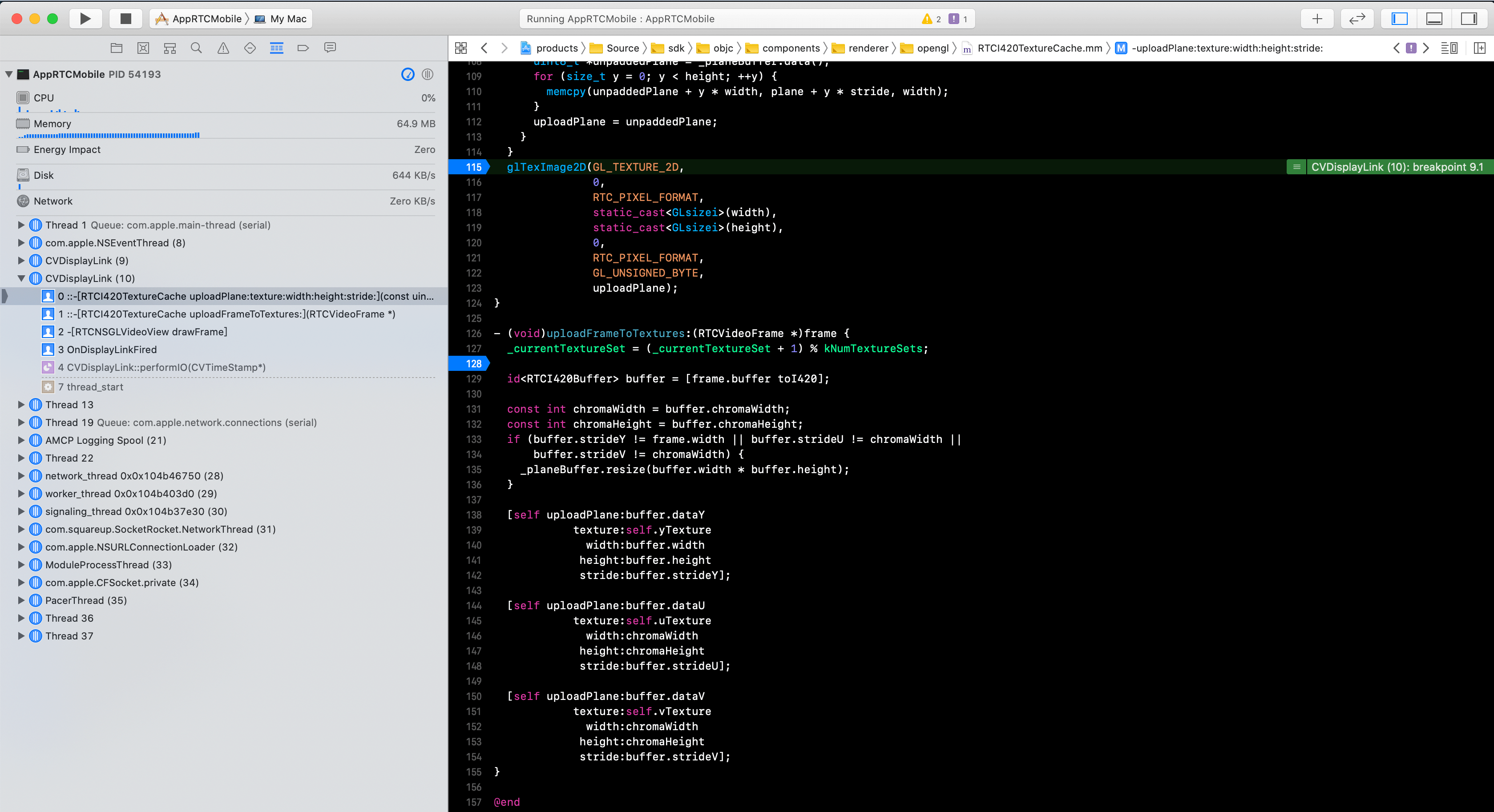

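```objc
- (void)uploadPlane:(const uint8_t *)plane
            texture:(GLuint)texture
              width:(size_t)width
             height:(size_t)height
             stride:(int32_t)stride {
  glBindTexture(GL_TEXTURE_2D, texture);

  const uint8_t *uploadPlane = plane;
  if ((size_t)stride != width) {
    // The plane has padding at the end of each row (stride != width).
    if (_hasUnpackRowLength) {
      // OpenGL ES 3.0 supports GL_UNPACK_ROW_LENGTH, so GL can skip the padding
      // itself. The value is measured in pixels, which equals bytes for a
      // single-channel, one-byte-per-sample Y, U or V plane.
      glPixelStorei(GL_UNPACK_ROW_LENGTH, stride);
      glTexImage2D(GL_TEXTURE_2D,
                   0,
                   RTC_PIXEL_FORMAT,
                   static_cast<GLsizei>(width),
                   static_cast<GLsizei>(height),
                   0,
                   RTC_PIXEL_FORMAT,
                   GL_UNSIGNED_BYTE,
                   uploadPlane);
      // Restore the default so later uploads assume tightly packed rows.
      glPixelStorei(GL_UNPACK_ROW_LENGTH, 0);
      return;
    } else {
      // Without GL_UNPACK_ROW_LENGTH (e.g. OpenGL ES 2.0), copy the plane row
      // by row into the scratch buffer, dropping the padding, and upload that.
      uint8_t *unpaddedPlane = _planeBuffer.data();
      for (size_t y = 0; y < height; ++y) {
        memcpy(unpaddedPlane + y * width, plane + y * stride, width);
      }
      uploadPlane = unpaddedPlane;
    }
  }
  // Upload a tightly packed plane (the original or the unpadded copy).
  // Assumes GL_UNPACK_ALIGNMENT is 1 (not shown here); with the default of 4,
  // rows whose width is not a multiple of 4 would be read incorrectly.
  glTexImage2D(GL_TEXTURE_2D,
               0,
               RTC_PIXEL_FORMAT,
               static_cast<GLsizei>(width),
               static_cast<GLsizei>(height),
               0,
               RTC_PIXEL_FORMAT,
               GL_UNSIGNED_BYTE,
               uploadPlane);
}
```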
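The copy fallback in `uploadPlane` is ordinary row-by-row de-padding and does not depend on OpenGL. Below is a minimal sketch of just that step in plain C++, which also compiles as Objective-C++. `UnpadPlane` is a hypothetical helper name. Unlike the method, which reuses the `_planeBuffer` member, it returns a fresh `std::vector`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper (not part of WebRTC): copies `height` rows of `width`
// bytes from a plane whose rows start `stride` bytes apart into a tightly
// packed buffer whose rows start `width` bytes apart.
std::vector<uint8_t> UnpadPlane(const uint8_t *plane, std::size_t width,
                                std::size_t height, std::size_t stride) {
  assert(stride >= width && "each source row must hold at least width bytes");
  std::vector<uint8_t> packed(width * height);
  for (std::size_t y = 0; y < height; ++y) {
    // Copy only the visible samples of row y; the trailing padding is skipped.
    std::memcpy(packed.data() + y * width, plane + y * stride, width);
  }
  return packed;
}
```

Take a 3×2 plane stored with a stride of 4. Each row carries one padding byte, so `UnpadPlane` returns the six visible bytes in row order. The method instead writes into a preallocated member buffer, which presumably avoids an allocation per plane per frame.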

```objc
- (void)uploadFrameToTextures:(RTCVideoFrame *)frame {
  // Rotate to the next texture set. Cycling through kNumTextureSets sets lets a
  // new frame be uploaded while an earlier set may still be in use by the GPU.
  _currentTextureSet = (_currentTextureSet + 1) % kNumTextureSets;

  // Get the frame as I420 so it has separate Y, U and V planes.
  id<RTCI420Buffer> buffer = [frame.buffer toI420];

  const int chromaWidth = buffer.chromaWidth;
  const int chromaHeight = buffer.chromaHeight;
  // If any plane is padded, make sure the scratch buffer used by the copy
  // fallback in uploadPlane can hold the largest (Y) plane.
  if (buffer.strideY != frame.width || buffer.strideU != chromaWidth ||
      buffer.strideV != chromaWidth) {
    _planeBuffer.resize(buffer.width * buffer.height);
  }

  [self uploadPlane:buffer.dataY
            texture:self.yTexture
              width:buffer.width
             height:buffer.height
             stride:buffer.strideY];

  [self uploadPlane:buffer.dataU
            texture:self.uTexture
              width:chromaWidth
             height:chromaHeight
             stride:buffer.strideU];

  [self uploadPlane:buffer.dataV
            texture:self.vTexture
              width:chromaWidth
             height:chromaHeight
             stride:buffer.strideV];
}
```
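`uploadFrameToTextures` resizes the scratch buffer only when some plane is padded. It sizes the buffer for the Y plane because that plane is the largest. The C++ sketch below models that bookkeeping under stated assumptions:

* The `PlaneLayout` and `I420Layout` types and all helper functions are hypothetical.
* `ChromaDim` applies the usual I420 rule of halving each dimension and rounding up. We assume this matches what `buffer.chromaWidth` and `buffer.chromaHeight` report.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical description of one plane: visible size and row pitch, in bytes.
struct PlaneLayout {
  int width;
  int height;
  int stride;
};

// Hypothetical description of an I420 frame's three planes.
struct I420Layout {
  PlaneLayout y, u, v;
};

// I420 chroma planes are subsampled by 2 in each direction; odd luma sizes
// round up so the last luma column/row still has a chroma sample.
inline int ChromaDim(int lumaDim) { return (lumaDim + 1) / 2; }

// Builds a layout for the given luma size and per-plane strides.
I420Layout MakeI420Layout(int width, int height, int strideY, int strideU,
                          int strideV) {
  assert(width > 0 && height > 0);
  const int cw = ChromaDim(width);
  const int ch = ChromaDim(height);
  return {{width, height, strideY}, {cw, ch, strideU}, {cw, ch, strideV}};
}

// Mirrors the condition in uploadFrameToTextures: true if any plane has
// padding at the end of its rows.
bool NeedsScratchBuffer(const I420Layout &l) {
  return l.y.stride != l.y.width || l.u.stride != l.u.width ||
         l.v.stride != l.v.width;
}

// The Y plane is the largest of the three, so width * height bytes is enough
// to hold an unpadded copy of any single plane.
std::size_t ScratchBufferSize(const I420Layout &l) {
  return static_cast<std::size_t>(l.y.width) *
         static_cast<std::size_t>(l.y.height);
}
```

Two examples:

* A 641×480 frame has 321×240 chroma planes. A Y stride of 704 triggers the scratch-buffer path.
* A 640×480 frame with strides 640/320/320 is already tightly packed and does not.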